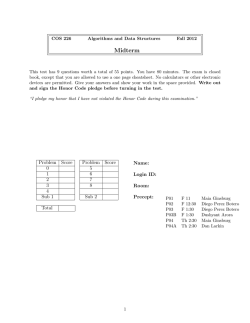

answers to questions